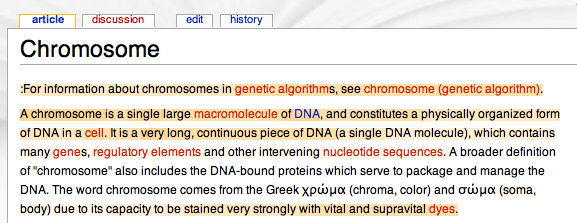

Look at that: the words in white were written by Wikipedia authors whose contributions to the encyclopedia tend to be “preserved, or built-upon”, while the words in orange were written by authors whose contributions tend to be deleted, reverted or edited. Does that help you read the encyclopedia?

The screenshot comes from the UCSC Wiki Lab’s Wikipedia Trust Coloring Demo, which I saw linked from Steve Rubel’s blog. Basically, the researchers downloaded a dump of Wikipedia in February and have been analysing the data, aiming to come up with a precise “reputation” figure for each contributor based on the extent to which his or her contributions are accepted or revised. The demo includes only a selection of pages, and they can’t be edited, but it certainly provides an interesting illustration of how such a system could work.

Browsing through the demo, I’m not sure that this would really help me read Wikipedia. I think I might find it more useful to see the level of dissent over a particular phrase or sentence in an article. For instance, I’d like to see recent additions, or phrases that have been haggled over and changed back and forth, marked in red. Sentences that have stayed the same or, perhaps even better, have been honed over time through a series of small changes affecting only grammar or word order would seem more trustworthy, so they could perhaps be green, or white if we follow the colours used in this demo.

This demo would be an excellent thing to show students, as a way to spark a discussion of how trustworthy Wikipedia is and what trustworthiness might mean.


Discover more from Jill Walker Rettberg



Don’t you trust me?…

From Jill Walker’s blog I came across a really interesting project which is trying to visualise “trust” in wikipedia. Basically, they scraped some of Wikipedia and run a program which coloured in authors’ contributions based on how long their edits…

Wikipedia links « scribblingwoman2

[…] Aug 8th, 2007 by Miriam Jones The Wikipedia Trust Coloring Demo: software that colour-codes text in Wikipedia entries according to the perceived reliability of the editors (from jill/txt, who suggests it might be useful in the classroom). Jimbo Wales signs on. […]